
FEATURE

Cooling Strategies for Present and Future Data Centers

What are the practical power density limitations of an all-air-cooled data center HVAC system?


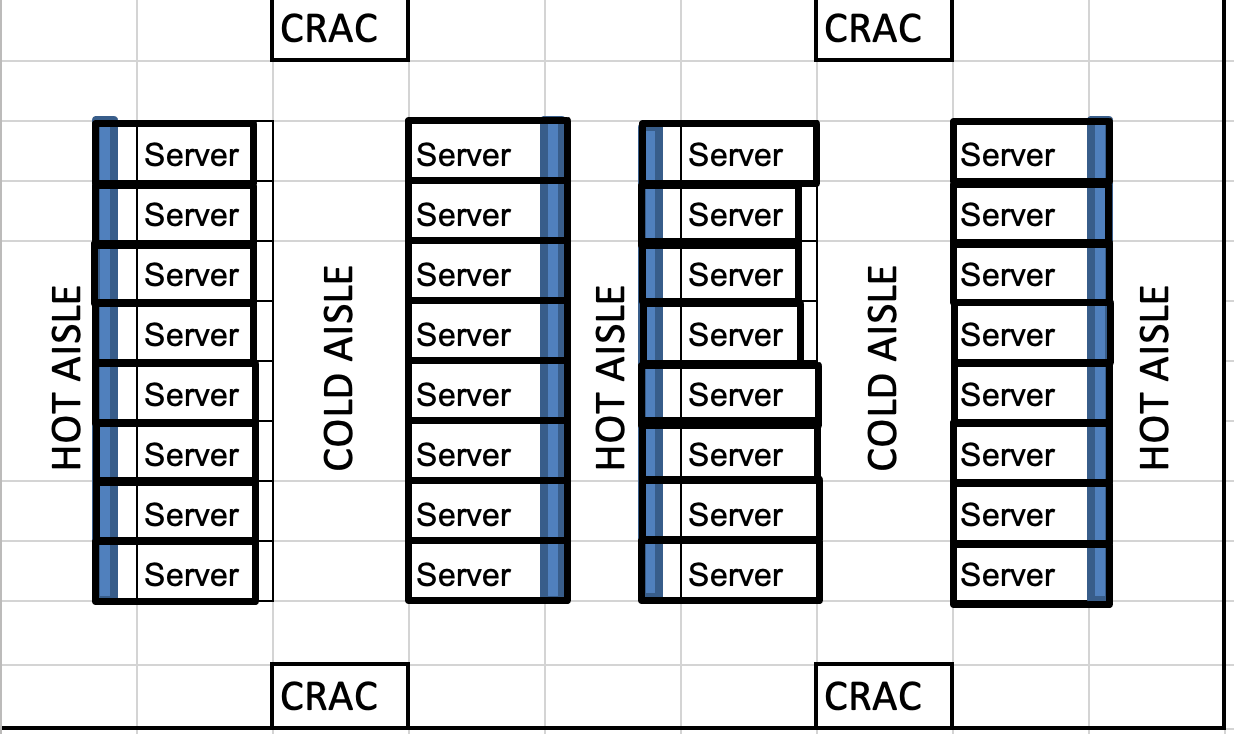

During the past 10-15 years, average data center power density remained stable (between 4 and 7 kW per rack) because of overall improvements in data center equipment and system efficiency. At 4-7 kW per rack, data centers were effectively conditioned by chillers and computer room air conditioner (CRAC) units, with cold air delivered through a raised floor, or supplied overhead by air-handling units (AHUs), into a cold aisle/hot aisle configuration (see Figure 1). The cold air effectively removed the heat generated by the IT equipment.

However, demand for larger and faster computational capacity is growing to meet expanding information technology requirements, and data center rack power density will increase substantially as a result. Whereas 10-12 kW per rack was considered high power density in 2016, the threshold had risen to 25 kW per rack by 2023, with some data centers requiring 50 kW per rack.

With the higher power densities, the following questions need to be answered: Can an all-air-cooling HVAC system meet the 25-50 kW per rack power density? What are the practical power density limitations of an all-air-cooled data center HVAC system? What is the most efficient and cost-effective mechanical cooling system to use for high power density data centers?

This article will define the different power density levels, address the above questions on high power density data centers, provide technical solutions for cooling high power density data centers, and discuss their future challenges.

Data Center Power Density

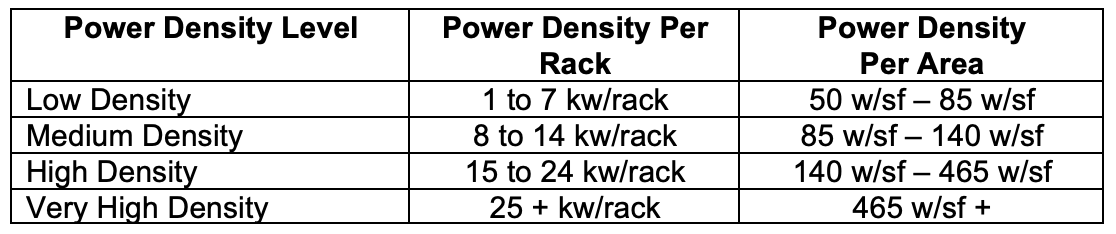

Power density requirements vary greatly depending on a data center’s computational requirements. Power density is typically expressed as the electrical load per rack and can range from 1 kW per rack to more than 30 kW per rack. A data center is classified as low, medium, high, or very high density (see Table 1).

TABLE 1. Data center power density.

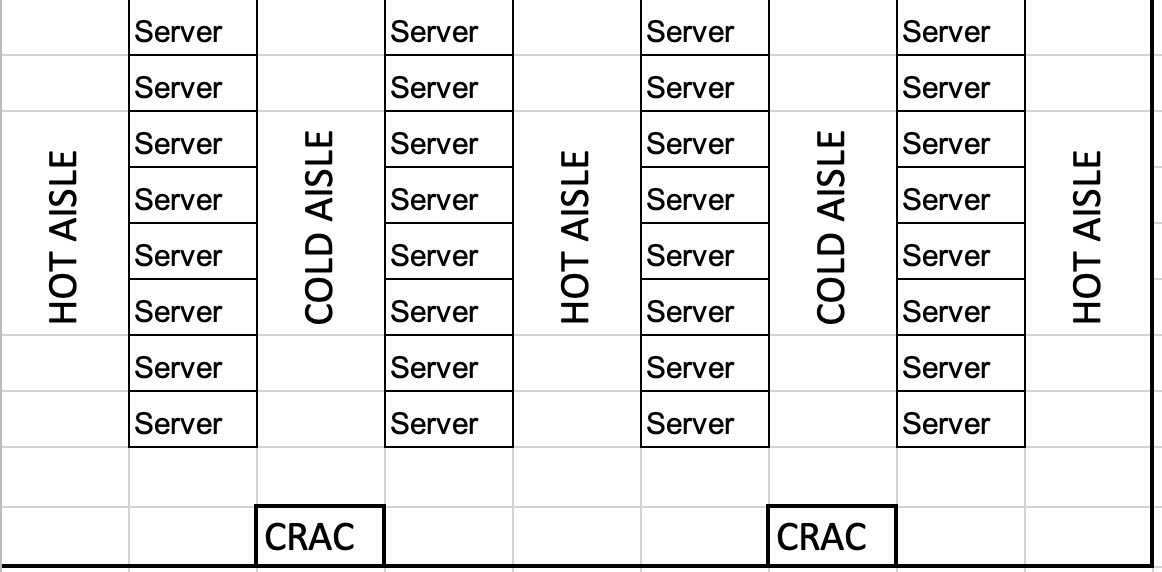

Lower Density Data Center Mechanical System Design

At lower power densities (1-7 kW per rack), 100% conditioned-air systems can efficiently remove the heat generated by the server racks. As shown in Figure 1, a typical lower power density data center has a raised floor, with downflow CRAC units around the perimeter supplying cold air through the raised floor to the cold aisle and the servers. The server racks are installed in rows with the server fronts facing each other to form the cold aisle, while the rack backs face each other to form the hot aisle. The server fans draw cold air from the cold aisle through the servers, where it absorbs the heat rejected by the server components, and discharge the heated air into the hot aisle. The hot-aisle air then returns to the CRAC units or AHUs, where it is cooled and conditioned.

Very High Density Data Center Mechanical System Design

The author designed mechanical systems for a server manufacturer that had started producing server racks at 30 kW per rack and was developing racks at 50 kW per rack. After assembly, these server racks were rolled into an existing 200-rack-capacity space, configured as cold aisle/hot aisle, for testing and burn-in. The existing test and burn-in facility was served by three 130-ton packaged rooftop air-handling units (RTUs) and three 50-ton RTUs, and it was originally designed for 10-kW-per-rack server racks.

Although upgrading the manufacturing and testing facility’s electrical service to accommodate the higher server loads posed substantial challenges, this article focuses on upgrading the existing mechanical cooling systems to meet those loads. The questions raised earlier are answered based on the analysis for this specific project; an analysis for a different project may yield somewhat different results.

A. Can an all-air-cooling HVAC system meet the 25-50 kW per rack power density?

For the test and burn-in facility, all HVAC equipment had to be roof-mounted, which limited the number of packaged air-handling units to what would fit on the available roof area (3,800 square feet) above the facility. With the existing 130-ton RTUs reconfigured, the 50-ton RTUs removed, and six 130-ton RTUs added, the facility’s total cooling capacity became 1,170 tons, enough to support a server rack power density of about 20 kW per rack. For this facility, an air-cooled-only solution could not meet either the 30 kW per rack or the 50 kW per rack cooling requirement.
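The capacity figures above can be checked with simple unit conversions. The sketch below is a rough check, not a design calculation: it assumes 1 ton of refrigeration equals 12,000 BTU/h (about 3.517 kW), treats the nominal RTU ratings as fully available for server heat, and ignores redundancy, fan heat, lighting, and envelope loads.

```python
# Rough capacity check for the 200-rack test and burn-in space.
# ASSUMPTION: nominal RTU tonnage is fully available for server heat
# (no redundancy, fan heat, lighting, or envelope loads).

KW_PER_TON = 3.517  # 1 ton of refrigeration = 12,000 BTU/h ≈ 3.517 kW
RACKS = 200         # rack capacity of the space


def tons_to_kw(tons: float) -> float:
    """Convert refrigeration tons to kW of heat removal."""
    return tons * KW_PER_TON


def kw_to_tons(kw: float) -> float:
    """Convert kW of heat load to refrigeration tons."""
    return kw / KW_PER_TON


# Original plant: three 130-ton and three 50-ton RTUs
original_tons = 3 * 130 + 3 * 50
# Upgraded plant: three reconfigured plus six new 130-ton RTUs
upgraded_tons = (3 + 6) * 130
supported_kw_per_rack = tons_to_kw(upgraded_tons) / RACKS

for label, tons in (("Original", original_tons), ("Upgraded", upgraded_tons)):
    per_rack = tons_to_kw(tons) / RACKS
    print(f"{label}: {tons:,} tons -> about {per_rack:.1f} kW per rack")

# Cooling needed to serve every rack at each target density
required_tons = {kw: kw_to_tons(kw * RACKS) for kw in (20, 30, 50)}
for kw, tons in required_tons.items():
    print(f"{kw} kW per rack needs about {tons:,.0f} tons")
```

Under these assumptions the upgraded plant works out to roughly 20 kW per rack (and the original plant to roughly 10 kW per rack), while 30 and 50 kW per rack would call for roughly 1,700 and 2,800 tons, well beyond the 1,170 tons installed.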

The existing test and burn-in space had a 20-foot-high roof deck with overhead cabling and power racks. With each of the nine 130-ton RTUs supplying 36,000 cfm to the space, the facility reached 250 air changes per hour (ACH), compared with the 30-50 ACH typical of data centers. This rate is more in line with a cleanroom than a data center, and the airflow distribution must be carefully designed to prevent hot spots.
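The air change rate is total supply airflow (cfm) times 60 minutes per hour, divided by the room volume. The sketch below reproduces the order of magnitude of the figure above under one loudly stated assumption: the floor area is taken as equal to the 3,800-square-foot roof area quoted earlier, which may not match the actual floor area.

```python
# Air changes per hour for the upgraded test and burn-in space.
# ASSUMPTION: floor area is taken as the 3,800 ft2 roof area quoted
# for the facility; the actual floor area may differ.


def air_changes_per_hour(total_cfm: float, floor_area_ft2: float, height_ft: float) -> float:
    """ACH = airflow (ft3/min) x 60 min/h / room volume (ft3)."""
    volume_ft3 = floor_area_ft2 * height_ft
    return total_cfm * 60 / volume_ft3


RTU_COUNT = 9            # 130-ton RTUs after the upgrade
CFM_PER_RTU = 36_000     # supply airflow per RTU
FLOOR_AREA_FT2 = 3_800   # assumed equal to the available roof area
HEIGHT_FT = 20           # roof deck height

ach = air_changes_per_hour(RTU_COUNT * CFM_PER_RTU, FLOOR_AREA_FT2, HEIGHT_FT)
print(f"About {ach:.0f} ACH")
```

With these inputs the result is roughly 256 ACH, close to the 250 ACH cited. In the same assumed 76,000-cubic-foot volume, a typical 30-50 ACH would correspond to only about 38,000-63,000 cfm in total.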

For the test and burn-in facility, an all-air-cooling solution was not a practical way to provide efficient heat removal for servers with a power density of 30-50 kW per rack.

B. What are the practical power density limitations of an all-air-cooled data center HVAC system?

As previously described, utilizing an all-air-cooling HVAC system for a data center with 20 kW per rack power density is possible but not necessarily the best technical solution. An all-air-cooling HVAC system is technically a better fit for data centers with server power densities up to 10-12 kW per rack.
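One way to see why air cooling runs out of headroom is the standard sensible heat relation for standard air, Q (BTU/h) ≈ 1.08 × cfm × ΔT (°F), with 1 kW ≈ 3,412 BTU/h. The sketch below assumes a hypothetical 20°F air temperature rise across the servers (actual server ΔT varies by equipment and fan speed) and shows that required airflow grows in direct proportion to rack power.

```python
# Airflow needed to remove a rack's heat with air alone.
# ASSUMPTION: 20°F air temperature rise across the servers (hypothetical).

BTU_PER_H_PER_KW = 3412  # 1 kW ≈ 3,412 BTU/h
SENSIBLE_FACTOR = 1.08   # standard air: Q[BTU/h] ≈ 1.08 * cfm * dT[°F]


def rack_airflow_cfm(rack_kw: float, delta_t_f: float = 20.0) -> float:
    """Supply airflow (cfm) needed to absorb rack_kw at a given air dT."""
    return rack_kw * BTU_PER_H_PER_KW / (SENSIBLE_FACTOR * delta_t_f)


for kw in (7, 12, 20, 30, 50):
    print(f"{kw:>2} kW per rack -> {rack_airflow_cfm(kw):,.0f} cfm")
```

At this assumed ΔT, a 50 kW rack needs roughly 7,900 cfm, about seven times the airflow of a 7 kW rack, and all of that air must be delivered to and returned from a single rack footprint.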

C. What is the most efficient and cost-effective mechanical cooling system to use for high power density data centers?

In the past, designers avoided routing cooling water into data center server spaces. However, water cooling is the best technical solution for dissipating heat from high power density server racks, and the most efficient approach is to get the cooling water as close as possible to the heat source. The three water-cooling heat dissipation methods reviewed here are in-row, in-rack, and direct-to-chip.

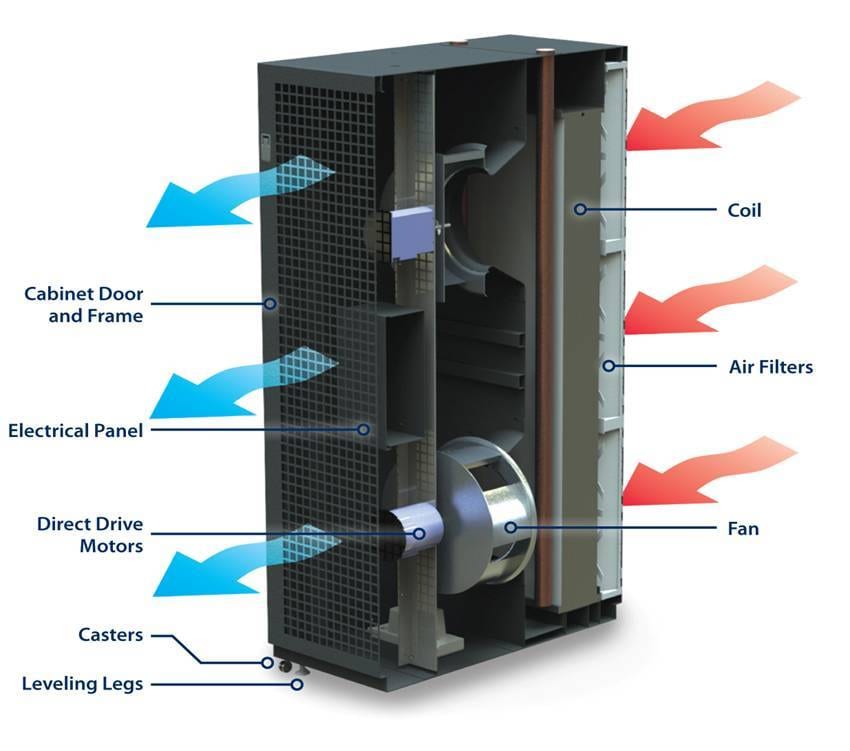

In-Row Based Cooling

In-row cooling consists of air conditioning units (ACUs) installed in the same row as the server racks (see Figures 2 and 3). The in-row ACU draws air from the hot aisle, conditions it, and supplies cold air to the cold aisle. In-row ACUs have cooling capacities of 65-75 kW when served by a chilled water system and 35-40 kW when served by a refrigerant system. In-row cooling can be the sole source of data center heat dissipation or can be combined with a traditional raised-floor CRAC or ducted air conditioning system.

FIGURE 1. A typical lower power density data center.

Images courtesy of WSP USA

In-row ACUs provide cooling close to the heat source, and units can be added or removed to match actual server cooling requirements. The result is better space environmental conditioning, lower fan energy, and more efficient heat dissipation that contributes to a lower power usage effectiveness (PUE). The chilled water system can be designed to meet any of the four tier reliability levels.

The main disadvantage of in-row ACUs is that they occupy floor space that would otherwise be used for servers. In addition, meeting Tier III or Tier IV data center reliability requirements is difficult without doubling the number of ACUs, which further reduces the number of rack positions available for servers.
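The floor space penalty can be illustrated with a simple unit count. The sketch below uses a hypothetical row of ten 30 kW racks and a hypothetical 70 kW chilled water in-row ACU (within the 65-75 kW range above), assumes each ACU takes one rack-width position (some units are narrower), and compares a base count (N) with N+1 and 2N redundancy. The 2N case corresponds to the doubling described above.

```python
import math

# Hypothetical row; none of these values come from a specific project.
RACKS_PER_ROW = 10   # server racks in the row
RACK_KW = 30         # heat load per rack
ACU_KW = 70          # in-row ACU capacity (chilled water)


def in_row_unit_counts(row_load_kw: float, unit_kw: float) -> dict:
    """Return ACU counts for N, N+1, and 2N redundancy schemes."""
    n = math.ceil(row_load_kw / unit_kw)
    return {"N": n, "N+1": n + 1, "2N": 2 * n}


counts = in_row_unit_counts(RACKS_PER_ROW * RACK_KW, ACU_KW)
for scheme, units in counts.items():
    # ASSUMPTION: each ACU occupies one rack-width position in the row
    share = units / (RACKS_PER_ROW + units)
    print(f"{scheme}: {units} ACUs, {share:.0%} of row positions")
```

In this example, a 300 kW row needs five ACUs at N, so cooling takes about a third of the row positions; at 2N it takes half.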

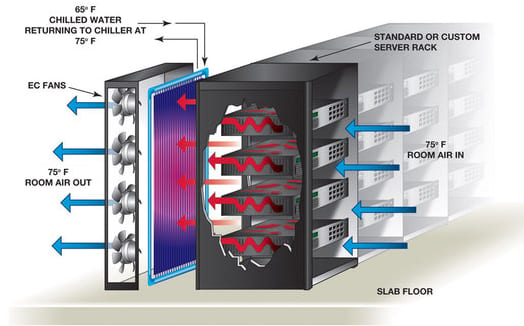

FIGURE 2. The in-row ACU draws air from the hot aisle, conditions the air, and supplies cold air to the cold aisle.

In-Rack Based Cooling

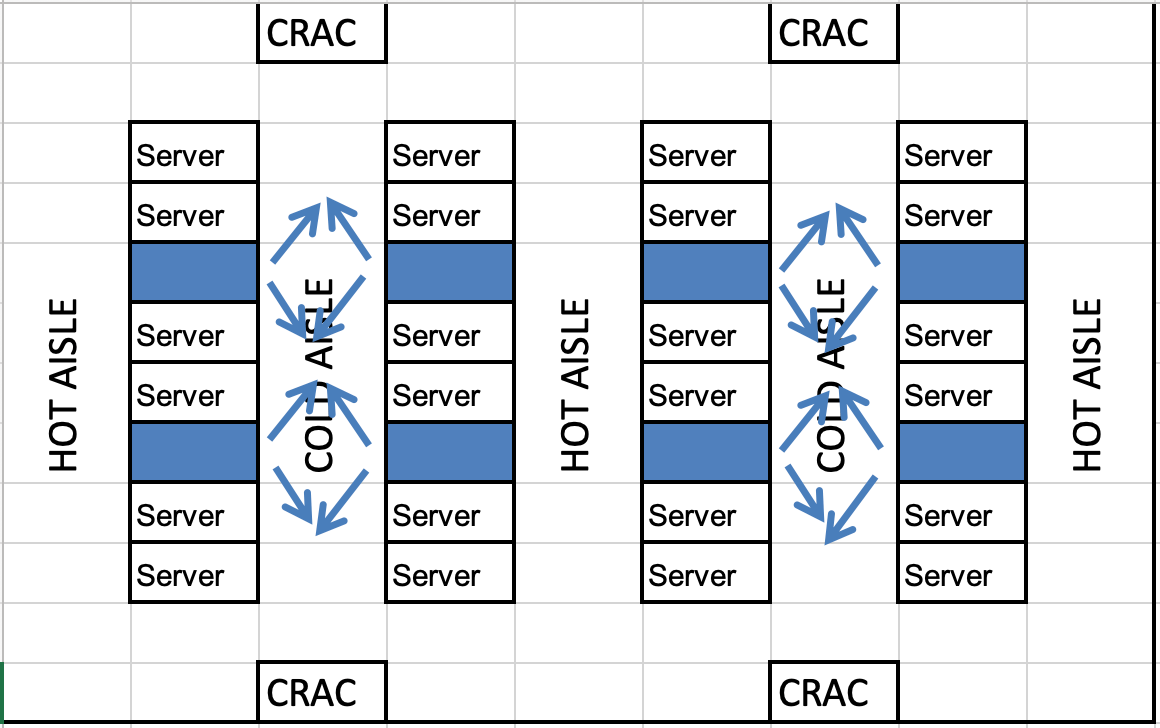

In-rack cooling consists of ACUs attached directly to the back of the server racks (see Figures 4 and 5). The in-rack ACU draws hot air directly from the server fan discharge, conditions it, and discharges it into the aisle. The in-rack ACU fans are designed to move at least as much air as the server fans. Depending on the server power density and the in-rack cooling capacity, in-rack cooling can meet all or most of the servers’ heat dissipation requirements. Like in-row cooling, in-rack cooling can be combined with a raised-floor CRAC or ducted air conditioning system. If the in-rack cooling handles the entire server heat load, the servers do not necessarily need to be arranged in a cold aisle/hot aisle configuration.
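Whether in-rack cooling meets all or only most of the heat load can be expressed as simple load bookkeeping. The sketch below is hypothetical: it treats the in-rack coil capacity as a fixed number (in practice it depends on water temperature and airflow), checks the design intent that the in-rack fans move at least as much air as the server fans, and assigns any heat the coil cannot absorb to the room CRAC or ducted system.

```python
def in_rack_split(server_kw, coil_kw, server_cfm, in_rack_fan_cfm):
    """Split rack heat between the in-rack coil and the room air system.

    Returns (kW removed by the in-rack coil, kW left for the room system).
    All inputs are hypothetical design values.
    """
    # Design intent: in-rack fans move at least the server fan airflow,
    # so the coil does not impede the server exhaust.
    if in_rack_fan_cfm < server_cfm:
        raise ValueError("in-rack fan airflow is below server fan airflow")
    to_coil = min(server_kw, coil_kw)
    return to_coil, server_kw - to_coil


# Hypothetical 35 kW in-rack coil serving a 30 kW rack and a 50 kW rack
print(in_rack_split(30, 35, server_cfm=4_500, in_rack_fan_cfm=5_000))  # (30, 0)
print(in_rack_split(50, 35, server_cfm=7_500, in_rack_fan_cfm=8_000))  # (35, 15)
```

In the first case the in-rack unit carries the whole load; in the second, 15 kW remains for the room system, which is when combining in-rack cooling with a CRAC or ducted system becomes necessary.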

FIGURE 3. An in-row cooling data center.

In-rack ACUs provide cooling at the heat source, attach directly to the server rack, and are added or removed along with it. They offer lower fan energy and faster, more efficient heat dissipation that contributes to a lower PUE. Another advantage is that the cooling capacity is sized to match the actual server load, enabling better space environmental control. The chilled water system can be designed to meet any of the four tier reliability levels.

The disadvantages of in-rack ACUs are that each server rack needs a dedicated ACU, which makes for a higher-cost installation, and that if an ACU fails, its server rack must be shut down until the ACU is repaired or replaced. Implementing Tier III or Tier IV data center reliability requirements at the server level is also difficult.

FIGURE 4. In-rack cooling consists of ACUs being directly attached to the back of the server racks.

Image courtesy of Motivair

Direct-to-Chip Cooling

In direct-to-chip cooling, chilled water is piped directly to a chip cooling heat exchanger inside the server rack. Because the chip heat exchangers do not capture all of the heat generated in the rack, the direct-to-chip method is combined with a typical raised-floor CRAC or ducted air conditioning system.

Direct-to-chip cooling offers heat dissipation at the primary heat source, lower fan energy, more efficient heat dissipation that contributes to a lower PUE, and faster heat dissipation. Additionally, the cooling capacity is sized to match the actual chip heat dissipation load.
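The heat carried by the water loop follows the standard water-side relation, Q (BTU/h) ≈ 500 × gpm × ΔT (°F). The sketch below estimates water flow and residual airflow for a hypothetical 50 kW rack, assuming (hypothetically) that the chip heat exchangers capture 75% of the rack heat, a 10°F water temperature rise, and a 20°F air temperature rise for the remainder handled by the room system. The capture fraction in particular varies widely by server design.

```python
# Water and air flows for a direct-to-chip rack.
# ASSUMPTIONS (hypothetical): 75% liquid capture, 10°F water dT, 20°F air dT.

BTU_PER_H_PER_KW = 3412  # 1 kW ≈ 3,412 BTU/h
WATER_FACTOR = 500       # water: Q[BTU/h] ≈ 500 * gpm * dT[°F]
AIR_FACTOR = 1.08        # standard air: Q[BTU/h] ≈ 1.08 * cfm * dT[°F]


def direct_to_chip_flows(rack_kw, liquid_fraction, water_dt_f=10.0, air_dt_f=20.0):
    """Return (water gpm to the chip heat exchangers, room airflow cfm)."""
    liquid_kw = rack_kw * liquid_fraction
    air_kw = rack_kw - liquid_kw
    gpm = liquid_kw * BTU_PER_H_PER_KW / (WATER_FACTOR * water_dt_f)
    cfm = air_kw * BTU_PER_H_PER_KW / (AIR_FACTOR * air_dt_f)
    return gpm, cfm


gpm, cfm = direct_to_chip_flows(50, liquid_fraction=0.75)
print(f"Water: {gpm:.1f} gpm, residual air: {cfm:,.0f} cfm")
```

Under these assumptions, about 26 gpm of water carries most of the load, leaving roughly 2,000 cfm for the room system, compared with roughly 7,900 cfm if the same 50 kW rack were cooled by air alone at a 20°F rise.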

The disadvantage of direct-to-chip cooling is that if a specific direct-to-chip heat exchanger fails, the specific server rack will need to be shut down until the direct-to-chip heat exchanger can be repaired or replaced.

Future Challenges for Very High Density Data Centers

Very high density data centers are going to face many challenges in the coming years. These challenges will stress the industry and lead to its transformation. Future challenges include:

Decarbonization and Sustainability — Data center energy efficiency efforts have focused on the support infrastructure, with PUE serving as the primary indicator of a data center’s energy efficiency. The problem with PUE is that it measures the efficiency of the support infrastructure, not that of the IT equipment. Future measures of data center energy efficiency need to include IT equipment energy efficiency.

A sustainability metric needs to be developed that accounts for the environmental impact of manufacturing servers and their chips. The challenge is to develop servers and chips from alternative materials with a smaller environmental footprint. The data center industry also needs to develop an overall decarbonization philosophy.
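PUE is total facility energy divided by IT equipment energy. The hypothetical numbers below illustrate the PUE limitation described above: if the IT equipment becomes 20% more efficient while the support infrastructure load stays the same (a simplification; in practice the infrastructure load would usually drop somewhat too), total energy falls but PUE gets worse.

```python
def pue(it_kw: float, infrastructure_kw: float) -> float:
    """Power usage effectiveness = total facility power / IT power."""
    return (it_kw + infrastructure_kw) / it_kw


# Hypothetical facility: 1,000 kW of IT load and 400 kW of infrastructure
baseline = pue(1_000, 400)
# ASSUMPTION: same infrastructure load, IT equipment made 20% more efficient
efficient_it = pue(800, 400)

print(f"Baseline: total {1_000 + 400:,} kW, PUE {baseline:.2f}")
print(f"Efficient IT: total {800 + 400:,} kW, PUE {efficient_it:.2f}")
```

The facility uses 200 kW less in total, yet its PUE rises from 1.40 to 1.50, which is why a metric that also captures IT equipment efficiency is needed.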

Electrical Utility Infrastructure — Higher server power density brings a substantially higher electrical utility requirement. For the test and burn-in facility, the site power needed to increase by 6 MW. Unfortunately, the utility company stated that it did not have the system capacity to provide the additional 6 MW of electrical service.

More municipalities and utility companies are imposing restrictions and moratoriums on new data centers because of the impact data centers have on the overall utility infrastructure. Very high power density data centers need to work with local municipalities and utilities to reduce their impact on the utility infrastructure and be willing to contribute to upgrading the local infrastructure.

Technically Talented Staff — The labor market for technically talented staff is becoming tighter. Data centers will find it tougher to hire the required trained and experienced staff to run and maintain their data centers. Companies will need to be proactive in developing college co-op and intern programs and support other training avenues.

FIGURE 5. An in-rack cooling data center.

Final Thoughts

In-row, in-rack, and direct-to-chip cooling systems can meet higher server rack power densities. Although it is difficult to design these systems to Tier III or IV reliability, a mechanical failure is isolated to a specific server rack or row of server racks.

The industry will be facing very challenging times in the future, but with creativity and positive action, there will be a positive transformation.


Vincent Sakraida, P.E., LEED AP

Vincent Sakraida is vice president and director of mechanical engineering with WSP USA. He boasts more than 40 years of experience in the design, construction, and operation of cleanroom, data center, pharmaceutical, laboratory, and other high-technology facilities. He is also a member of ASHRAE. Contact him at bsme82@comcast.net.

[Nikada]/[iStock / Getty Images Plus] via Getty Images